
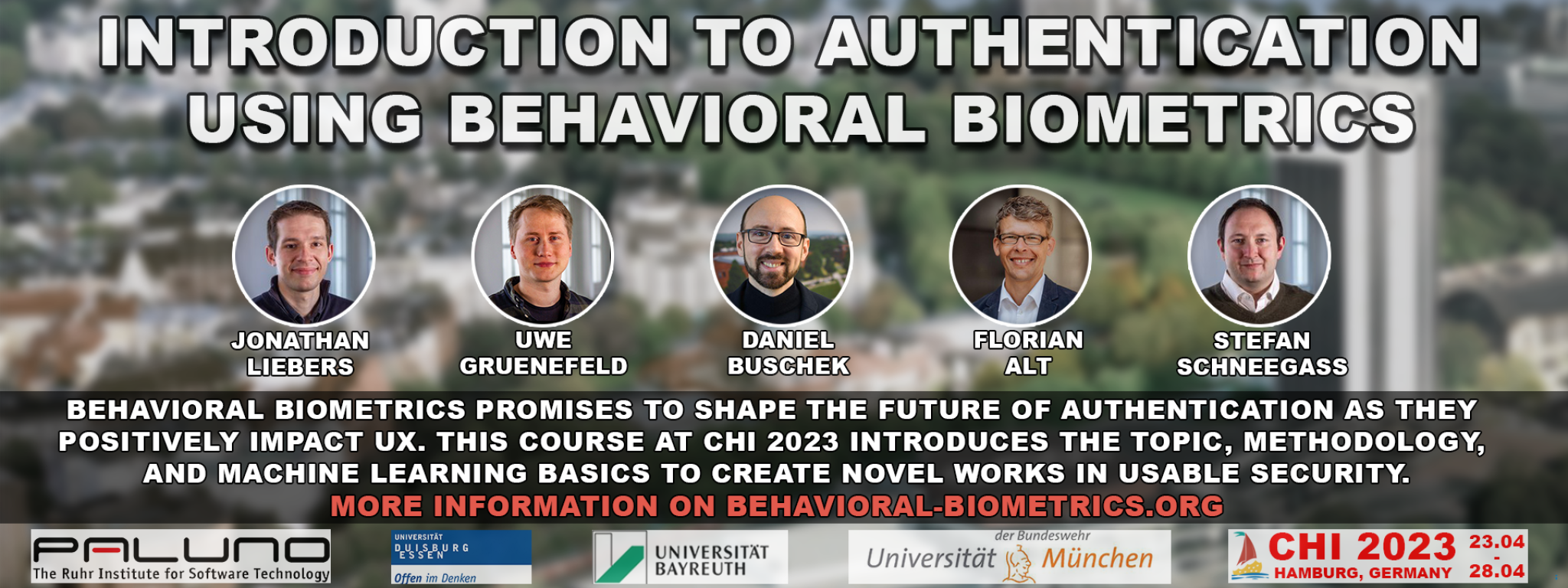

Course at CHI 2023

Welcome to the website of our course at CHI 2023. On these pages, you can find key information about the course and its contents. A wiki with the course notes and supplementary material is currently in the works and will follow soon. If you have any questions, please feel free to reach out via our e-mail address.

Key Information

Time: Tuesday, 25 April 2023, 14:30–15:55 and 16:35–18:00.

Room: X04

Course Contents

This course covers biometric authentication based on behavioral biometrics. Specifically, it covers the following topics:

- Introduction to Biometric Authentication

- Alternatives to Passwords and Authentication Fatigue

- Future Authentication: Implicit & Continuous

- Behavioral Biometric Modalities

- Foundations of Biometric Authentication Systems (Enrolment, Verification, Identification)

- How to implement a Biometric Matching Module using Machine Learning

- Metrics and Evaluation: From Study Design via Biometric Stability to Metrics
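To give a rough feel for how enrolment, verification, identification, and the usual error metrics fit together, here is a minimal illustrative sketch. It is not the course's own implementation: a deliberately simple distance-based template matcher stands in for the machine-learning model the course discusses, and the names `TemplateMatcher`, `far_frr`, and `equal_error_rate`, the Euclidean distance, and the mean-vector templates are all hypothetical choices made for this example.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def mean_vector(samples: List[Vector]) -> List[float]:
    """Average several feature vectors element-wise into one template."""
    n = len(samples)
    return [sum(values) / n for values in zip(*samples)]


def distance(a: Vector, b: Vector) -> float:
    """Euclidean distance between two feature vectors (lower = more similar)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


class TemplateMatcher:
    """Hypothetical distance-based matcher: one mean template per enrolled user."""

    def __init__(self, threshold: float) -> None:
        self.threshold = threshold
        self.templates: Dict[str, List[float]] = {}

    def enrol(self, user: str, samples: List[Vector]) -> None:
        # Enrolment: condense the user's training samples into a reference template.
        self.templates[user] = mean_vector(samples)

    def verify(self, claimed_user: str, probe: Vector) -> bool:
        # Verification (1:1): accept if the probe is close enough to the claimed template.
        return distance(probe, self.templates[claimed_user]) <= self.threshold

    def identify(self, probe: Vector) -> str:
        # Identification (1:N): return the enrolled user whose template is nearest.
        return min(self.templates, key=lambda u: distance(probe, self.templates[u]))


def far_frr(genuine: List[float], impostor: List[float], threshold: float) -> Tuple[float, float]:
    """FAR = share of impostor distances accepted; FRR = share of genuine distances rejected."""
    far = sum(d <= threshold for d in impostor) / len(impostor)
    frr = sum(d > threshold for d in genuine) / len(genuine)
    return far, frr


def equal_error_rate(genuine: List[float], impostor: List[float]) -> Tuple[float, float]:
    """Approximate the EER by trying every observed distance as a threshold.

    Returns (eer, threshold) at the point where FAR and FRR are closest.
    """
    best = None
    for t in sorted(set(genuine + impostor)):
        far, frr = far_frr(genuine, impostor, t)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, (far + frr) / 2, t)
    return best[1], best[2]


if __name__ == "__main__":
    matcher = TemplateMatcher(threshold=1.0)
    matcher.enrol("alice", [[1.0, 2.0], [1.2, 2.2]])
    matcher.enrol("bob", [[5.0, 5.0], [5.2, 4.8]])
    print(matcher.verify("alice", [1.1, 2.0]))  # True
    print(matcher.identify([5.1, 5.1]))         # bob
    # Distances of genuine attempts vs. impostor attempts (toy values).
    print(equal_error_rate([0.1, 0.2, 0.3, 0.9], [0.5, 0.8, 1.2, 1.5]))  # (0.25, 0.5)
```

The single `threshold` is what trades false accepts against false rejects; sweeping it over observed distances, as `equal_error_rate` does, is one simple way to locate the operating point where FAR and FRR meet. A real behavioral-biometrics system would typically replace the mean template and Euclidean distance with a trained model's similarity score, and would evaluate on data collected across sessions to account for biometric stability.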